An incident is the negative consequence of an action: the action fails to produce the expected outcome. For instance, we deploy code to production to add a feature that improves performance, and it takes down the whole service instead. This is an unexpected outcome. Incident learning is the part where we uncover the underlying problems that led to the incident. The more severe the incident, the more there is to learn. Nevertheless, incidents without severe consequences happen more often than severe ones. Therefore, it’s essential to derive learnings from these minor incidents and share them with a wider audience.

Multiple Causes

Incidents have many different causes. There generally isn’t a single reason behind an incident. Several factors might contribute, such as non-functioning alerts, incomplete or incorrect runbooks, unclear direction, and more. The main reason to hold debriefs about incidents is to uncover these causes and learn from them.

Investigation

The investigation isn’t about fixing the problem. It’s about uncovering where things went wrong, what we found difficult, surprising, or challenging, and what we know now that we didn’t know or realize before. It’s a process for learning about potential risks. Nor is it about mitigating these problems. It’s simply about detecting the problems that led to one or more incidents, along with the challenges faced during incident recovery.

Language

It’s crucial to use good language. Incidents never occur due to someone’s mistake. They occur due to organizational problems, such as gaps in support and risk mitigation. Instead of asking “why” questions, we can use better language, because “why” questions might put someone on the defensive. Consider “Why didn’t you use the X version of the Y library?” We can ask the same question as follows: “During the incident, you used the P version of the library Y. Tell us why you made this decision.” Psychological safety is essential to uncover these learnings. We should promote it as often as possible.

What went wrong?

We should uncover what went wrong in the incident not only from a technical perspective but also from a non-technical one. For instance, if someone was woken up in the middle of the night, that would be a contributing factor to the incident. We should focus on the parts that actually went wrong rather than on hypothetical scenarios such as “If we had done X, then Y would have happened.” That scenario didn’t occur and it doesn’t help build the learning, so we should spend no time on it. Some interesting questions around what went wrong are as follows.

- What actually happened?

- Which components were involved?

- What decisions did we have to make?

- How did we make those decisions?

- Did we try something that had worked for us before?

What went right?

We naturally spend a good deal of time on what went wrong. Nevertheless, we should also celebrate what went right. Perhaps our systems partially recovered, or some of the measures we took earlier worked. We should promote these learnings too. They are positive learnings that we might want to share with the readers of the incident document.

Classifying Incidents

With many minor incidents happening, it gets hard to know what to focus on for engineering health purposes. Thus, it’s essential to come up with a classification framework to find the areas that the teams should focus on. Once the classification is ready, we can start labeling incidents with these classifications so that they are easy to find in both incident documents and tickets. Classification can be approached from different angles. Here’s a classification matrix for illustration purposes. I classified incidents on two dimensions: severity and labels. An incident has exactly one severity. Nonetheless, it can have one or more labels. In the following matrix, I have three colored incidents with different severities.

| Labels \ Severity | Sev 1 | Sev 2 | Sev 3 |
| --- | --- | --- | --- |
| Lack of Testing | | | |
| External Dependency | | | |
| Configuration Change | | | |
| System Software Failure | | | |
| Hardware Failure | | | |
| Exceeding Scaling Limits | | | |
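
To make the two dimensions concrete, here is a minimal Python sketch of how such a classification could be modeled. The `Incident` class, the `build_matrix` helper, and the sample incidents are hypothetical names and data invented for illustration; only the severities and labels come from the matrix above.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum
from typing import FrozenSet, Iterable


class Severity(Enum):
    SEV1 = "Sev 1"
    SEV2 = "Sev 2"
    SEV3 = "Sev 3"


class Label(Enum):
    LACK_OF_TESTING = "Lack of Testing"
    EXTERNAL_DEPENDENCY = "External Dependency"
    CONFIGURATION_CHANGE = "Configuration Change"
    SYSTEM_SOFTWARE_FAILURE = "System Software Failure"
    HARDWARE_FAILURE = "Hardware Failure"
    EXCEEDING_SCALING_LIMITS = "Exceeding Scaling Limits"


@dataclass(frozen=True)
class Incident:
    title: str
    severity: Severity          # exactly one severity per incident
    labels: FrozenSet[Label]    # one or more labels per incident

    def __post_init__(self):
        if not self.labels:
            raise ValueError("an incident needs at least one label")


def build_matrix(incidents: Iterable[Incident]) -> Counter:
    """Count incidents per (label, severity) cell of the matrix."""
    matrix = Counter()
    for incident in incidents:
        for label in incident.labels:
            matrix[(label, incident.severity)] += 1
    return matrix


# Hypothetical incidents, invented for illustration only.
incidents = [
    Incident("Checkout outage", Severity.SEV1,
             frozenset({Label.CONFIGURATION_CHANGE, Label.LACK_OF_TESTING})),
    Incident("Payment provider timeout", Severity.SEV2,
             frozenset({Label.EXTERNAL_DEPENDENCY})),
    Incident("Slow search", Severity.SEV3,
             frozenset({Label.EXCEEDING_SCALING_LIMITS})),
]

matrix = build_matrix(incidents)
```

An incident with several labels is counted once in each matching row, so the cell counts show which labels keep recurring at which severity.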

Turning Learnings into Competitive Advantage

A key piece is to generate insights from incidents for the organization. We want to reduce technical errors, but we can’t achieve that without a good strategy. We want to eliminate the paths that lead to the next incident, not just the incident that already happened. Therefore, incident analysis isn’t about the incident itself. It’s about stopping the next incident. Netflix’s Chaos Monkey approach came out of such incidents. They changed the company strategy to live with hiccups rather than trying to mitigate them. Things can go wrong. Therefore, we should make them go wrong. Chaos Monkey became a well-known technique in the industry.

In some cases, people rush to deploy many services or changes to production. There might be many minor incidents that go unnoticed. Nevertheless, it is wise to look for patterns in when incidents happen. Perhaps they happen during holiday periods because there are fewer people around. Fewer people work on activities such as design reviews, code reviews, and so on. Hence, an organizational insight might be to put extra effort in around these times so that things don’t go wrong. For instance, Amazon didn’t allow pushing changes to production before Black Friday. It might not be optimal, but this has been learned from previous incidents. It simply isn’t worth losing customer trust.
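
As a rough illustration of looking for such patterns, the sketch below counts incidents per calendar month to surface periods where they cluster. The dates and the helper names `incidents_per_month` and `busiest_months` are made up for this example; a real analysis would read them from the incident tracker.

```python
from collections import Counter
from datetime import date

# Hypothetical incident dates, invented for illustration only.
incident_dates = [
    date(2023, 3, 14),
    date(2023, 7, 2),
    date(2023, 12, 22),
    date(2023, 12, 27),
    date(2023, 12, 29),
]


def incidents_per_month(dates):
    """Count incidents per (year, month) pair."""
    return Counter((d.year, d.month) for d in dates)


def busiest_months(dates, top=1):
    """Return the `top` months with the most incidents, highest first."""
    return incidents_per_month(dates).most_common(top)


counts = incidents_per_month(incident_dates)
hotspots = busiest_months(incident_dates)
```

With this sample data, December stands out, which would prompt a closer look at staffing and review coverage around the holidays.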

Incidents can happen for various reasons that aren’t obvious. Capturing those reasons should be part of the incident learning. Incidents might occur because teams are under-resourced, leaving them less time to design or review their output. Other teams might have degraded morale due to various factors. These dynamics might not be obvious if we only think about the technical side of things. The learning here might be simply acknowledging that the team is under-resourced or has poor morale. This insight might help management fill the missing roles and perhaps introduce team-building activities.

As in these examples, we should try to turn past learnings into a competitive advantage in areas such as hiring, releasing, and adopting new techniques. We do, however, need to look at incidents from different perspectives to get these insights. Insights should then turn into strategies and procedures that enable the rapid restoration of your system and data in the event of a catastrophic failure or disruption. Incident learning should guide these plans. You should consider data backup methods, infrastructure redundancy, and clear recovery procedures.

Highlighting Key Learnings

In recent years, some incidents have been shared publicly, such as the GitLab.com database incident. GitLab shared a postmortem document in which they went through the incident. The incident debrief goes through a timeline, a root cause analysis, and then improvements to recovery procedures. Nevertheless, it doesn’t call out the learning explicitly. I suggest calling out learnings explicitly.

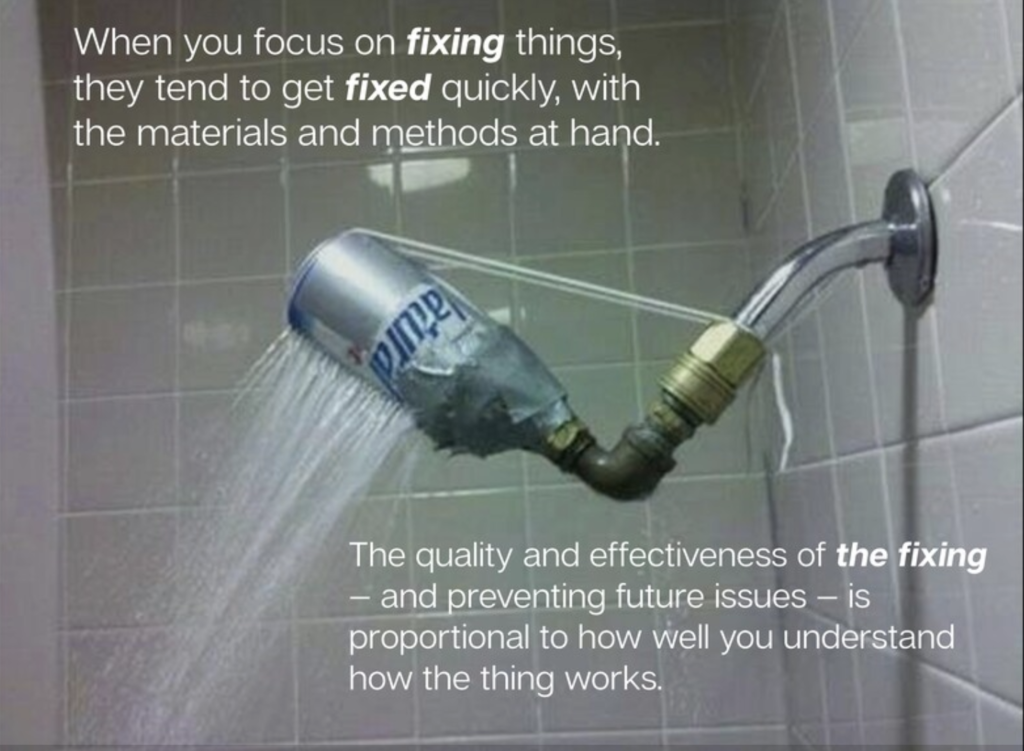

Learning is Different than Fixing

Overlooking the learning piece is a missed opportunity. When we focus on fixing things, we might lose the chance to find deeper causes. We will fix the issue with the materials and methods we have at hand. Nevertheless, the fix might turn out to be suboptimal or temporary. Therefore, we should focus on the key findings and learnings instead. Rushing to a fix isn’t the intent of incident learning meetings.

Promoting Learnings

Incident meetings are about learnings. Therefore, we should promote the learning pieces. In incident debrief documents, the key learning parts are often neglected or come last. I think the learning part has to be the focus. I would love it to be literally the first thing a reader sees. If I’m outside of the organization and I don’t have enough context, give me some learning that my team and I can reflect on. If I’m more interested, I can go ahead and read the rest of the document. What’s more, some postmortem documents don’t have any key learnings at all. Nobody documented them, or perhaps nobody focused on them. We should actively promote writing a key learnings part for each incident debrief document.

Conclusion

Incident postmortems and debriefs are opportunities for organizations to learn and to promote incident learning. In doing so, we need to think about learning at each phase, such as investigation. As an organization, taking a step back and seeing these incidents from different perspectives can give the business a competitive advantage. Perhaps a monthly newsletter of learnings from incidents might help the organization learn and reflect on them. Furthermore, a general look across incidents can detect causes that aren’t solely technical. Ultimately, focusing on the learnings is what lets us consolidate minor incident learnings into actionable insights.

References

Book: The Field Guide to Understanding ‘Human Error’

Paper: Learning from error: The influence of error incident characteristics

Article: Learning from Incidents

Blog: Incident Analysis: Your Organization’s Secret Weapon

Book: Operations Anti-Patterns, DevOps Solutions

Blog: Learning from incidents: from ‘what went wrong?’ to ‘what went right?’

Video: Incident Analysis: How *Learning* is Different Than *Fixing*