A service overload happens when a service receives more incoming requests than it can reasonably respond to. A service can get overloaded for many reasons, such as a sudden surge in traffic, a change in the service configuration, or attacks by malicious actors. When it is overloaded, the service starts to behave differently. It returns HTTP error codes, responds with increased latency, refuses new connections, returns partial content, and so forth.

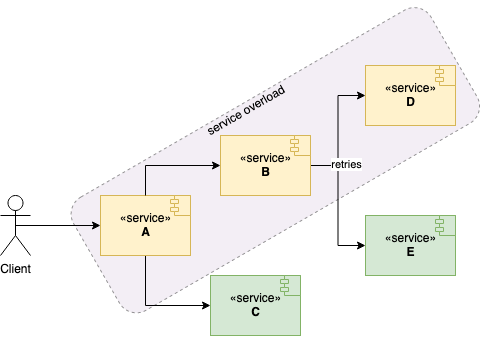

An overloaded service in a chain of services can overload the rest of the business. If services don’t have a robust strategy against overload, one or two services can take down the whole application. When a service fails to respond, its clients might retry, so they end up sending even more requests. The overloaded service might then become completely unavailable, resulting in a service blackout. What’s worse, a single service can take down the services that depend on it, and in turn the whole application. To mitigate service overload, we can introduce strategies such as throttling, load shedding, and brownout.
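
As a rough illustration of how retries compound, assume (purely hypothetically) that a request passes through $k$ hops and that every caller, starting with the end client, makes up to $r$ attempts before giving up. In the worst case, when every attempt fails, the attempts multiply at each hop, so the deepest service receives

$$
N_{\text{deepest}} = r^{k} \cdot N_{\text{original}}
$$

For example, with $r = 3$ and $k = 3$ (client → A → B → C), service C can receive up to $3^3 = 27$ times the original traffic, exactly when it can least afford it.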

Throttling

Throttling is a mechanism to control how much a client can use a service. It introduces a cap per client, and there are two ways to define that cap. API-level throttling sets a cap for a certain API path, while service-level throttling sets a service-wide cap. With either type, once a client reaches the cap, the service simply rejects new requests from that client, typically with HTTP 429 Too Many Requests. The following Node.js sample uses the express-rate-limit middleware to limit access to the user API.

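```javascript
const express = require("express");
const rateLimit = require("express-rate-limit");

const app = express();

// Clients are identified by IP address by default; over the cap, they get HTTP 429.
const limiter = rateLimit({
  windowMs: 15 * 60 * 1000, // 15 minutes
  max: 100, // Limit each client to 100 requests per window
  standardHeaders: true, // Return rate limit info in the `RateLimit-*` headers
});

// API-level cap: applies only to routes under /api/user/.
// Mounting it with app.use(limiter) instead would make it a service-level cap.
app.use("/api/user/", limiter);
```

Load Shedding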

Load shedding is a mechanism for rejecting excess client requests so that the service can focus on the requests it can reasonably serve. In the world of elastic computing, services can scale horizontally when configured to do so. Nevertheless, no service can scale indefinitely. Shedding excess requests keeps the service more available: when a service approaches its limit, it’s better to serve a portion of requests with low latency than to serve all of them slowly or not at all. Typically, the load shedding mechanism kicks in when a given metric, e.g., latency, crosses a threshold. The server then sends an HTTP 503 Service Unavailable response, and it can tell clients when to retry by setting the Retry-After header.
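
Below is a minimal sketch of load shedding as Node.js middleware. As an assumption, it uses the number of in-flight requests as the trigger metric instead of latency, because concurrency is cheap to track and drops on its own as requests complete; a latency-based trigger would have the same shape. The names `createLoadShedder`, `maxInFlight`, and `retryAfterSeconds` are hypothetical, and any threshold values would need tuning for a real service.

```javascript
// Hypothetical load-shedding middleware for Node.js http or Express.
// Once `maxInFlight` requests are being processed, new requests are
// rejected immediately with 503 and a Retry-After hint (in seconds).
function createLoadShedder({ maxInFlight, retryAfterSeconds }) {
  let inFlight = 0; // requests currently being processed by this instance

  return function loadShedder(req, res, next) {
    if (inFlight >= maxInFlight) {
      // Threshold crossed: shed this request instead of queueing it.
      res.statusCode = 503;
      res.setHeader("Retry-After", String(retryAfterSeconds));
      res.end("Service Unavailable");
      return;
    }

    inFlight += 1;
    let released = false;
    const release = () => {
      // Both "finish" and "close" may fire for one response; count it once.
      if (!released) {
        released = true;
        inFlight -= 1;
      }
    };
    res.on("finish", release); // response fully sent
    res.on("close", release); // connection closed, possibly early
    next();
  };
}
```

With Express, it would be mounted ahead of the routes, e.g. `app.use(createLoadShedder({ maxInFlight: 100, retryAfterSeconds: 5 }))`. Unlike the throttling cap, this limit is shared by all clients: it protects the service as a whole rather than enforcing fairness between clients.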

Brownout

Browning out is a mechanism to keep serving clients with the bare minimum of computation when the service hits its capacity. Imagine a service that returns a product catalog for the user’s front page. The service depends on two other services: one returns previously bought products, and the other serves recommended products. If the service hits its capacity, it tries to compute less; for instance, it calls only the service for previously bought products. It then sends a response with extra headers indicating that it has browned out and when the client can retry. In the above diagram, service A depends on service B and service C. Perhaps service A can keep serving by leveraging only service C, as it’s still able to keep up with the demand. A sample for browning out is as follows.

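```javascript
// Hypothetical Express route for the front-page catalog (assumes an Express `app`).
// getPreviouslyBoughtProducts, getRecommendedProducts, mergeProducts and
// isAtCapacity are application-specific helpers assumed to exist.
app.get("/catalog", async (request, response) => {
  const previouslyBoughtProducts = await getPreviouslyBoughtProducts();

  if (isAtCapacity()) {
    // Brown out: skip the recommendation call and serve the cheaper result,
    // with a custom header hinting when to retry (presumably in milliseconds).
    response.set({ "X-Brownout-retry": "5000" });
    response.send({ items: previouslyBoughtProducts });
  } else {
    // Normal path: combine both sources into the full catalog.
    const recommendedProducts = await getRecommendedProducts();
    const mergedProducts = mergeProducts(
      previouslyBoughtProducts,
      recommendedProducts
    );
    response.send({ items: mergedProducts });
  }
});
```

Conclusion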

In this post, I’ve gone through some strategies for coping with service overload. They guard against surges of legitimate traffic, but they mitigate the overload problem only to a certain extent: a service pushed far enough past its maximum capacity will still be overwhelmed and black out. What these strategies do is delay that blackout for as long as possible. Without any of them, services are very vulnerable to sudden spikes. Elastic computing helps but may not be sufficient on its own, so it’s crucial to have a safety net for services.
